An Analysis of Aggression Dynamics and Behavior Forecasting in Temporal Social Network

Hate speech is more common than we may think. In April 2022 alone, Facebook identified over 53,000 instances of hate speech and Instagram acted on 77,000 such cases. These figures may not include covert cases. As online platforms have become widely available, the digital landscape has become a breeding ground for aggressive behavior, posing a significant threat to digital well-being and social harmony.

Cyber-aggression, however, is more than hate speech. Online aggression encompasses a wide spectrum of hostile actions, from cyberbullying and harassment to the spread of offensive and hateful content. The following wheel can give us a better understanding.

We developed a comprehensive definition of cyber-aggression by drawing on literature from several fields of study, including sociology, psychology, and computer science.

Cyber-aggression refers to harmful intentional online behavior, irrespective of whether it is overt or covert. It includes the use of hostile, offensive, or abusive language, insults, threats, and abusive comments intended to cause discomfort, distress, or harm to individuals or communities. (Mane et al. (2025))

There has been work on hate speech detection. However, the definition makes clear that detecting content is just one part of a wide range of issues surrounding cyber-aggression. Recognizing the urgency of this issue, our research group, SoNAA, embarked on a dedicated journey in 2020 to understand, detect, and ultimately forecast aggressive behavior in online social environments. This article chronicles our research efforts, beginning with multilingual cyberbully detection, extending to large-scale empirical analysis of aggressive content, and culminating in the development of a novel forecasting framework, the Temporal Social Graph Attention Network (TSGAN). Our work provides a comprehensive perspective on online aggression, integrating content-based analysis with behavioral understanding and predictive modeling.

Chapter 1: Laying the Groundwork - Multilingual Cyberbully Detection (Karan and Kundu (2023))

Our initial foray into the sphere of online aggression, documented in the PReMI 2023 paper, focused on the critical task of identifying potential bullies and their targets within the multilingual Indian Twitter-o-sphere. This early work recognized the linguistic diversity of online interactions in India and aimed to develop an end-to-end solution capable of handling multiple languages without relying on translation.

We proposed a pipeline architecture utilizing two Long Short-Term Memory (LSTM) based classifiers. The first LSTM model was trained for language detection, enabling the system to automatically identify the language of a given tweet. Subsequently, based on the detected language, the tweet was passed to a language-specific aggression detection LSTM classifier. We trained four distinct aggression detection models for Hindi, English, Bengali, and Hinglish (Hindi written in Roman script). The training data for these models comprised over 150,000 tweets. For aggression detection, we utilized and adapted the TRAC datasets, converting the labels into a binary classification of aggressive (combining Overtly Aggressive and Covertly Aggressive) and non-aggressive.
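The routing logic of this two-stage pipeline can be sketched as follows. This is a minimal illustration, not the published implementation: the class `AggressionPipeline`, the toy detector functions, and the label codes `OAG`/`CAG`/`NAG` are assumptions introduced here, and the keyword rules merely stand in for the trained LSTM models.

```python
from typing import Callable, Dict, Tuple


def to_binary(trac_label: str) -> int:
    """Collapse TRAC-style labels into aggressive (1) / non-aggressive (0).

    Assumed label codes: OAG (overt), CAG (covert), NAG (non-aggressive).
    """
    return 1 if trac_label in {"OAG", "CAG"} else 0


class AggressionPipeline:
    """Stage 1 detects the language; stage 2 routes to a per-language detector."""

    def __init__(
        self,
        detect_language: Callable[[str], str],
        detectors: Dict[str, Callable[[str], int]],
    ) -> None:
        self.detect_language = detect_language
        self.detectors = detectors

    def predict(self, tweet: str) -> Tuple[str, int]:
        language = self.detect_language(tweet)
        detector = self.detectors.get(language)
        if detector is None:
            raise ValueError(f"no aggression detector for language {language!r}")
        return language, detector(tweet)


# Hypothetical stand-ins for the trained LSTM classifiers.
def toy_language_detector(tweet: str) -> str:
    return "hinglish" if "hai" in tweet.lower().split() else "english"


def toy_aggression_detector(tweet: str) -> int:
    return int("idiot" in tweet.lower())


pipeline = AggressionPipeline(
    toy_language_detector,
    {"english": toy_aggression_detector, "hinglish": toy_aggression_detector},
)
print(pipeline.predict("you are an idiot"))
```

Keeping the detectors in a dictionary keyed by language means a new language can be supported by registering one more model, without touching the routing code.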

The multilingual approach proved effective, with the language-specific LSTM-based aggression detectors achieving promising F1 scores: 0.73 for English, 0.83 for Hindi, 0.69 for Bengali, and an impressive 0.91 for Hinglish. Beyond detection, this study also ventured into user profiling, analyzing various attributes of potential bully and target users using Twitter’s public data. We reported interesting patterns related to follower counts, friends counts, tweet frequencies, and the percentage of aggressive tweets associated with these user categories. One notable observation was that users mentioned in bully posts also exhibited aggressive behavior in their own tweets, hinting at the potentially reciprocal and contagious nature of online aggression. This observation laid the foundation for our subsequent investigations into the dynamics of aggression.

Chapter 2: Comprehensive Survey on Cyber-Aggression (Mane et al. (2025))

Once the opportunity and research questions were clear, we dug deeper into the literature to check whether these questions had already been answered. This resulted in a survey paper in ACM Computing Surveys titled “A Survey on Online Aggression: Content Detection and Behavioral Analysis on Social Media”. The paper systematically reviews research on aggression content detection and behavioral analysis on social media platforms. It addresses the increasing issue of cyber-aggressive behavior and its societal risks, and offers the unified and comprehensive definition of cyber-aggression stated above.

The survey thoroughly examines the process of aggression content detection, including dataset creation, feature selection and extraction (such as stylistic, sentiment, and syntactic features, as well as text representations like FastText and GloVe), and the development of detection algorithms ranging from traditional machine learning to deep learning and transformer-based models like BERT. It highlights the prevalence of English language datasets and research in this area.

Furthermore, the paper reviews studies focused on the behavioral analysis of aggression, exploring the influencing factors, consequences, and patterns associated with cyber-aggressive behavior. It emphasizes the significance of integrating sociological insights with computational techniques to develop more effective prevention systems for cyber-aggression.

The survey identifies a notable gap in integrating aggression content detection and behavioral analysis and aims to bridge this divide to achieve a more holistic understanding of online aggression. Finally, the paper concludes by highlighting research challenges and directions for future work, advocating for a socio-computational approach to address cyber-aggressive behavior.

Chapter 3: Empirical Evidence (Mane et al. (2025))

Building upon the initial success of multilingual aggression detection, our research progressed towards a large-scale empirical analysis of aggressive content, detailed in our Applied Intelligence paper. This phase aimed to gain deeper insights into the factors influencing aggressive behavior by analyzing a substantial volume of data.

Data Collection and Annotation

We collected a large English Twitter Aggression dataset. The data crawling spanned from January 1, 2022, to July 15, 2022, and was initiated using hashtags from three offline “seed” events across political, social, and religious categories. We then collected all posts from users who engaged with these seed events, as well as posts from their followers and from the accounts they follow, resulting in a dataset of 14 million English tweets and 63,000 users. Importantly, this dataset included not only content directly related to the seed events but also posts on various other topics within the same timeframe. A subset of this dataset was manually annotated for aggression with a substantial agreement score of 0.78 (Krippendorff’s alpha). We call this annotated dataset TAG-EN. This rigorous annotation process ensured the quality of our ground truth data.
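For readers unfamiliar with the agreement score, the sketch below computes Krippendorff's alpha for nominal labels using the standard coincidence-matrix formulation. It is an illustration only: the function name and the input layout (one list of labels per annotated item) are assumptions, and it is not the script used to produce the 0.78 figure.

```python
from collections import Counter
from itertools import permutations
from typing import Hashable, List, Sequence


def krippendorff_alpha_nominal(units: Sequence[List[Hashable]]) -> float:
    """Krippendorff's alpha for nominal data.

    `units` holds, for each annotated item, the labels assigned by the
    annotators who rated it. Items with fewer than two labels are skipped.
    """
    coincidences: Counter = Counter()
    for ratings in units:
        m = len(ratings)
        if m < 2:
            continue
        # Each ordered pair of ratings from different annotators
        # contributes 1 / (m - 1) to the coincidence matrix.
        for a, b in permutations(range(m), 2):
            coincidences[(ratings[a], ratings[b])] += 1.0 / (m - 1)

    totals: Counter = Counter()
    for (c, _k), weight in coincidences.items():
        totals[c] += weight
    n = sum(totals.values())
    if n <= 1:
        raise ValueError("need at least one item with two or more labels")

    observed = sum(w for (c, k), w in coincidences.items() if c != k) / n
    expected = sum(
        totals[c] * totals[k] for c in totals for k in totals if c != k
    ) / (n * (n - 1))
    if expected == 0:
        return 1.0  # only one label was ever used, so no disagreement is possible
    return 1.0 - observed / expected


# Two annotators, four posts, labels 1 = aggressive, 0 = non-aggressive.
print(krippendorff_alpha_nominal([[1, 1], [0, 0], [1, 0], [0, 0]]))
```

An alpha of 1 means perfect agreement, 0 means agreement no better than chance, and negative values indicate systematic disagreement.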

Data Collection Pipeline

Data Annotation Pipeline

Aggression Detection Model and User Aggression Intensity

Leveraging this newly created dataset, we developed an aggression detection model by fine-tuning RoBERTa, a pre-trained transformer model known for its robust performance in natural language understanding tasks. Furthermore, to quantify the level of aggression exhibited by individual users, we proposed a novel “user aggression intensity” metric. This metric allowed us to move beyond binary classification and gain a more nuanced understanding of user behavior.
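The exact formula for user aggression intensity is given in the paper and is not reproduced here. As a rough illustration of the idea, the sketch below assumes a simple definition: the share of a user's posts in a time window that the classifier labels as aggressive. The `Post` type and the function `aggression_intensity` are hypothetical names introduced for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Post:
    user: str
    time: datetime
    aggressive: bool  # label produced by an aggression classifier


def aggression_intensity(
    posts: List[Post], user: str, start: datetime, end: datetime
) -> Optional[float]:
    """Assumed definition: fraction of the user's posts in [start, end) that are aggressive.

    Returns None when the user posted nothing in the window, so that
    inactivity is not confused with non-aggressive behavior.
    """
    window = [p for p in posts if p.user == user and start <= p.time < end]
    if not window:
        return None
    return sum(p.aggressive for p in window) / len(window)


t0 = datetime(2022, 1, 1)
posts = [
    Post("u1", t0, True),
    Post("u1", t0 + timedelta(hours=1), False),
    Post("u2", t0, True),
    Post("u1", t0 + timedelta(days=2), True),
]
print(aggression_intensity(posts, "u1", t0, t0 + timedelta(days=1)))
```

A continuous score of this kind can be tracked per user and per time window, which is what makes it usable as a forecasting target later on.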

Overall Pipeline of the Research

Key Findings

The empirical analysis of this Twitter data yielded several notable findings:

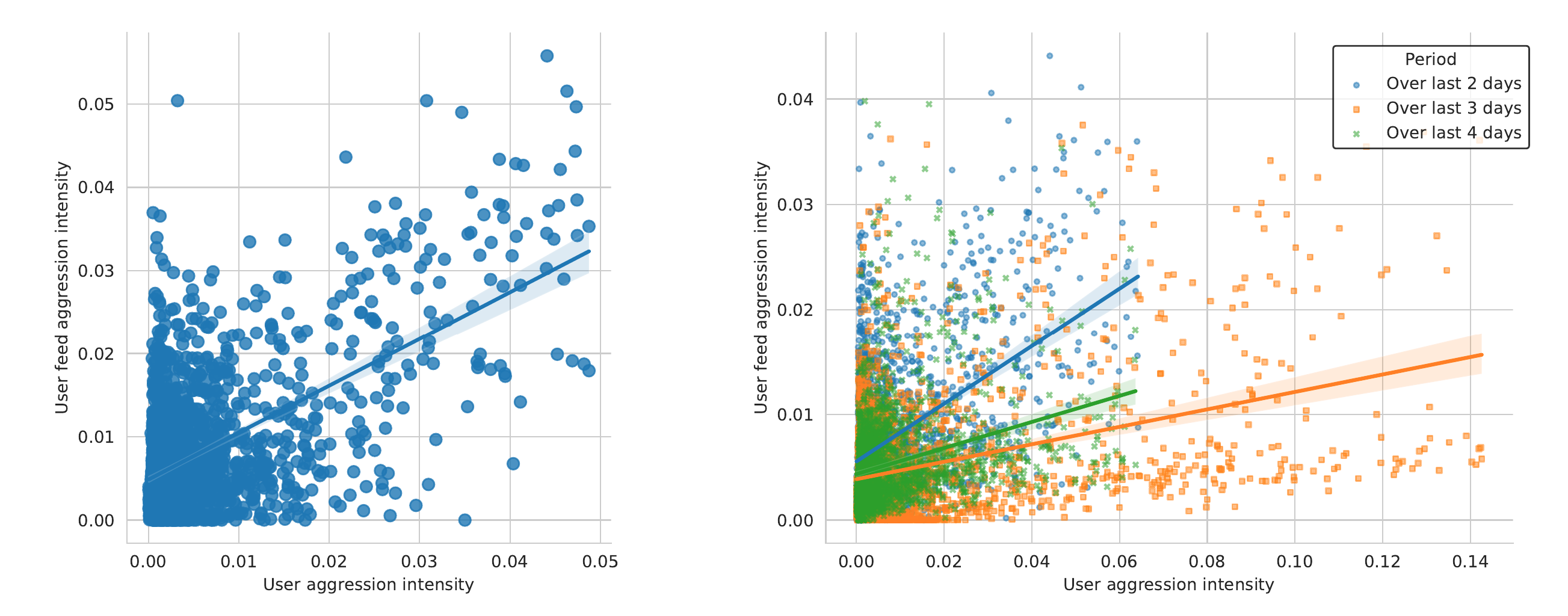

- Finding 1. A user's aggression intensity is correlated with the aggression intensity of their feed, and the effect is short-term.

Correlation of User Intensity with Feed Intensity (correlation 0.58, moderately strong)

- Finding 2. Feed aggression intensity of aggressive posts is higher than that of non-aggressive posts.

Ratio of aggressive posts after exposure to aggressive feeds in the last 24 hours.

- Finding 3. Aggressive posts about an event have a higher event-specific feed aggression intensity.

Ratio of aggressive posts on a specific topic after exposure to aggressive feeds on that topic in the last 24 hours.

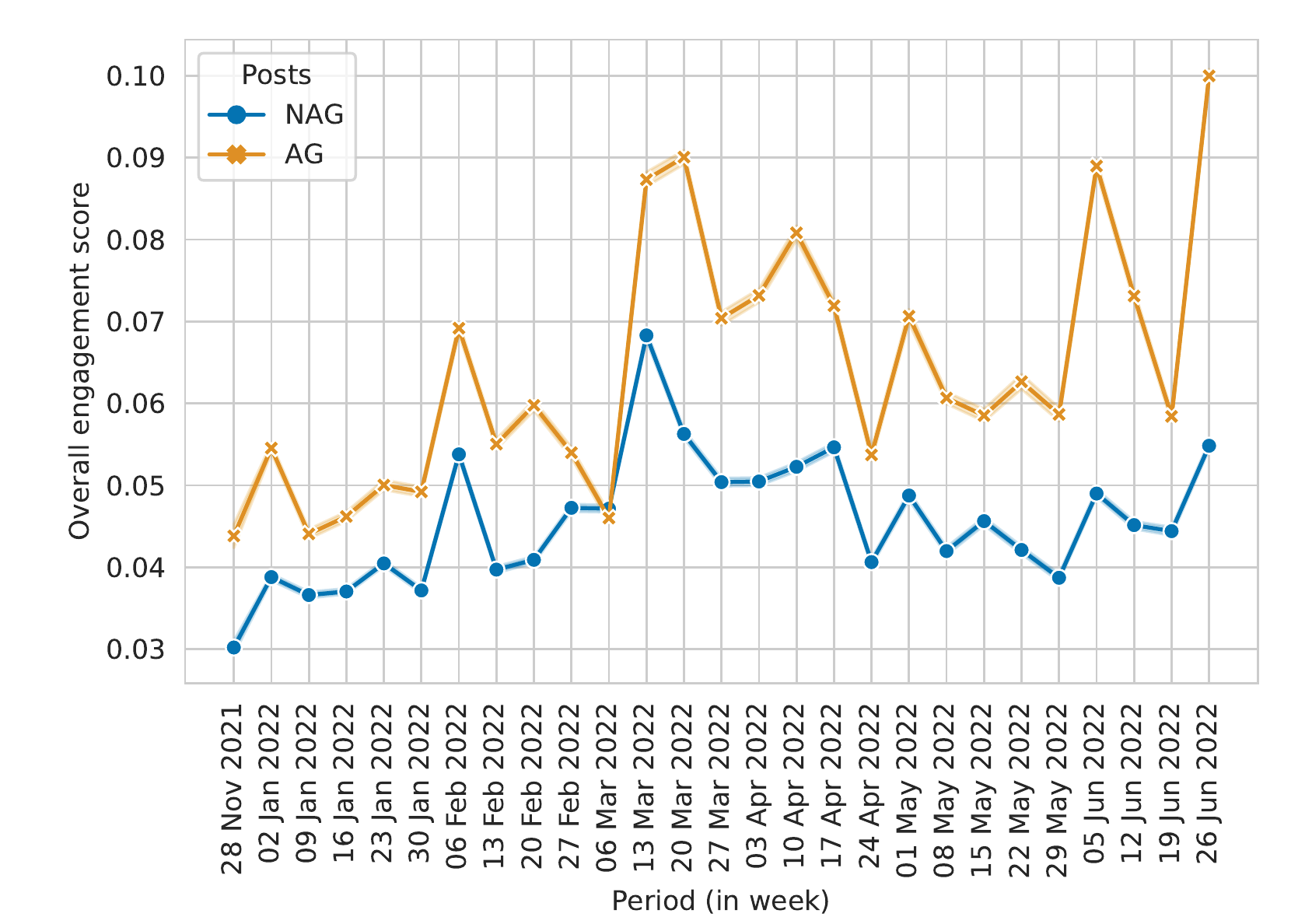

- Finding 4. Aggressive posts had a higher engagement score than non-aggressive posts.

User Engagement with Aggressive and Non-Aggressive Posts.
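To make Findings 1 and 2 concrete, the sketch below shows one way a feed intensity over the preceding 24 hours and its correlation with user intensity could be computed. The definition of feed intensity used here (share of aggressive posts among the posts a user was exposed to in the window) and both function names are assumptions for illustration; the paper's exact definitions may differ.

```python
from datetime import datetime, timedelta
from math import sqrt
from typing import List, Optional, Sequence, Tuple


def feed_intensity(
    feed_posts: List[Tuple[datetime, bool]], at: datetime, hours: int = 24
) -> Optional[float]:
    """Share of aggressive posts in a user's feed during the `hours` before `at`.

    `feed_posts` holds (timestamp, is_aggressive) pairs for posts by the
    accounts the user follows. Returns None if the feed was empty.
    """
    start = at - timedelta(hours=hours)
    recent = [aggressive for t, aggressive in feed_posts if start <= t < at]
    if not recent:
        return None
    return sum(recent) / len(recent)


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length >= 2")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / sqrt(var_x * var_y)


now = datetime(2022, 3, 1, 12)
feed = [
    (now - timedelta(hours=2), True),
    (now - timedelta(hours=5), False),
    (now - timedelta(hours=30), True),  # outside the 24-hour window
]
print(feed_intensity(feed, now))
```

Pairing each user's intensity with their feed intensity over the same windows and passing the two lists to `pearson` gives a coefficient of the kind reported in Finding 1.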

Chapter 4: Predicting the Future - Temporal Social Graph Attention Network (TSGAN) (Mane et al. (2025))

Recognizing that understanding and detecting aggression are crucial first steps, our research advanced to the more challenging problem of forecasting individual user aggression in dynamic social networks, as presented in the AAAI 2025 paper. Existing research had primarily focused on modeling the detection of aggressive content rather than the diffusion in the temporal network. TSGAN is designed to fill this critical gap.

TSGAN Architecture

TSGAN is a social-aware sequence-to-sequence architecture specifically designed to forecast aggressive behavior in social networks. Its core innovation lies in an adaptive socio-temporal attention module (ASTAM) that dynamically models both social influence and temporal dynamics. To capture the broader network context and global social influence, TSGAN employs a graph contrastive learning approach to generate global network context embeddings (GNCE). Specifically, it utilizes Bootstrapped Graph Latents (BGRL) to encode nodes into vector representations, preserving both local and global network structure information. These pre-computed GNCEs are then integrated into TSGAN.

TSGAN also uniquely addresses several key aspects of online social behavior:

- User inactivity: The model accounts for periods when users are not actively posting.

- Dynamic strength of follower relationships: It models how changes in the strength of a follower relationship can impact user behavior.

- Temporal behavioral decay: TSGAN incorporates the idea that past behavior’s influence may diminish over time.

The core of TSGAN lies in its Adaptive Socio-Temporal Attention Module (ASTAM). ASTAM is designed to jointly model dynamic social influence through active incoming peer interactions and temporal dynamics via decay-aware attention to historical behaviors. It achieves this by employing two parallel attention mechanisms: Social Influence Attention (SIA) and Temporal Dynamics Attention (TDA). SIA dynamically prioritizes actively engaging peers with strong social ties, considering relationship features. TDA, on the other hand, captures dependencies in a user’s past behavior, giving more weight to recent actions while still accounting for longer-term patterns through an exponential decay mechanism. The outputs of SIA and TDA are then adaptively integrated by the Integration Attention Gateway (IAG), allowing the model to balance the influence of social triggers and individual behavioral history.

Complementing ASTAM is the Cross-Temporal Attention (CTA), which addresses long-term forecasting by capturing non-linear and time-decoupled dependencies between past and future time steps. CTA allows the model to directly link historical behavior representations to future predictions, enabling it to prioritize distant but influential past behaviors that might not follow linear temporal progressions. Notably, CTA utilizes pre-computed Global Network Context Embeddings (GNCE) for future time steps to maintain a realistic forecasting approach.

These two modules, ASTAM and CTA, work in concert to enable TSGAN to effectively forecast aggressive behavior by dynamically modeling both short-term socio-temporal influences and long-term dependencies.

The overall architecture is shown below.
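The interplay of decay-aware temporal attention, activity-masked social attention, and a gate that blends the two can be illustrated with a small NumPy sketch. This is a simplified stand-in under stated assumptions, not the TSGAN implementation: the function names, the scalar sigmoid gate, the use of log tie strength as an additive bias, and the `decay_rate` parameter are all choices made for this example.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    e = np.exp(x - np.max(x))
    return e / e.sum()


def temporal_attention(query, history, ages, decay_rate=0.1):
    """Attend over a user's past states with an exponential recency bias.

    history: (T, d) past behavior vectors; ages: (T,) steps since each state.
    Subtracting decay_rate * age from a logit scales that state's
    unnormalized weight by exp(-decay_rate * age).
    """
    scores = history @ query / np.sqrt(query.size) - decay_rate * ages
    weights = softmax(scores)
    return weights @ history, weights


def social_attention(query, peers, tie_strength, active):
    """Attend over peers, favoring strong ties and ignoring inactive peers.

    peers: (N, d); tie_strength: (N,) strictly positive; active: (N,) bool.
    """
    if not active.any():
        return np.zeros_like(query), np.zeros(len(peers))
    scores = peers @ query / np.sqrt(query.size) + np.log(tie_strength)
    scores = np.where(active, scores, -np.inf)  # masked peers get zero weight
    weights = softmax(scores)
    return weights @ peers, weights


def gated_merge(social, temporal, gate_w, gate_b=0.0):
    """Blend both summaries with a scalar sigmoid gate (assumed form of the gate)."""
    z = gate_w @ np.concatenate([social, temporal]) + gate_b
    g = 1.0 / (1.0 + np.exp(-z))
    return g * social + (1.0 - g) * temporal


query = np.array([1.0, 0.0])
history = np.ones((3, 2))
_, t_weights = temporal_attention(query, history, ages=np.array([2.0, 1.0, 0.0]))
print(t_weights)  # the most recent state receives the largest weight
```

In a trained model the query, gate weights, and decay rate would be learned parameters; here they are fixed so the effect of each mechanism is easy to inspect.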

Hybrid Aggression Content Detection

In the process, we also proposed a hybrid aggression content detection model. This high-performing model (92.87% F1 score) combines a fine-tuned transformer (AG-BERT) with a large language model (LLM) to accurately quantify user aggression over time. The hybrid approach balances computational efficiency with high accuracy, which is crucial for real-time analysis of large-scale social network data. The content detection model first obtains document embeddings using AG-BERT and retrieves contextually similar documents from the training set. It then constructs a prompt using the input document and these similar examples, prompting LLaMa-2 to predict the final aggression label.
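The retrieve-then-prompt step can be sketched as below. The example assumes embeddings are already available as NumPy arrays (in the paper they come from AG-BERT), uses cosine similarity for retrieval, and builds a few-shot prompt whose wording is invented here for illustration. The functions `top_k_similar` and `build_prompt` are hypothetical, and the call to the LLM itself is deliberately left out.

```python
from typing import List, Tuple

import numpy as np


def top_k_similar(query_vec: np.ndarray, train_vecs: np.ndarray, k: int = 3) -> List[int]:
    """Indices of the k training embeddings most cosine-similar to the query."""
    q = query_vec / np.linalg.norm(query_vec)
    m = train_vecs / np.linalg.norm(train_vecs, axis=1, keepdims=True)
    similarities = m @ q
    return np.argsort(-similarities)[:k].tolist()


def build_prompt(text: str, examples: List[Tuple[str, str]]) -> str:
    """Few-shot prompt: retrieved labeled examples first, then the post to classify."""
    lines = ["Classify the post as 'aggressive' or 'non-aggressive'.", ""]
    for example_text, example_label in examples:
        lines += [f"Post: {example_text}", f"Label: {example_label}", ""]
    lines += [f"Post: {text}", "Label:"]
    return "\n".join(lines)


# Toy 2-dimensional embeddings standing in for AG-BERT document embeddings.
train_texts = [("get lost, loser", "aggressive"), ("lovely weather", "non-aggressive"),
               ("shut up already", "aggressive")]
train_vecs = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
query_vec = np.array([1.0, 0.1])

neighbors = top_k_similar(query_vec, train_vecs, k=2)
prompt = build_prompt("nobody asked you", [train_texts[i] for i in neighbors])
print(prompt)
```

Retrieval is cheap compared with LLM inference, so only the final labeling step incurs the cost of the larger model.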

Experimental Evaluation and Results

We rigorously evaluated TSGAN on real-world datasets, including X (formerly Twitter) for aggression forecasting and Flickr for popularity prediction, demonstrating the model’s versatility and effectiveness. TSGAN outperformed a diverse array of baseline methods – ranging from traditional statistical models like Historical Average (HA) and ARIMA to advanced deep learning approaches including RNN, GRU, LSTM, LSTNet, STGCN, and DCRNN. While some graph-based models showed improvements over sequence models, they often struggled to scale to our moderate-size social network. TSGAN achieved state-of-the-art performance in forecasting across hourly, daily, and weekly temporal intervals, showing up to 24.8% improvement in daily aggression predictions. Ablation studies further validated the significant contribution of each submodule within TSGAN.
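As a point of reference for the comparison above, the sketch below implements the simplest baseline mentioned, Historical Average, together with one common way of expressing a relative improvement. The choice of mean absolute error as the metric and the formula in `relative_improvement` are assumptions for illustration; the numbers in the example are made up and are not results from the paper.

```python
from typing import List, Sequence


def historical_average_forecast(series: Sequence[float], horizon: int) -> List[float]:
    """Forecast every future step as the mean of the observed history."""
    if not series:
        raise ValueError("history must not be empty")
    mean = sum(series) / len(series)
    return [mean] * horizon


def mae(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute error between two equally long sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("sequences must be non-empty and equally long")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def relative_improvement(baseline_error: float, model_error: float) -> float:
    """Percentage by which the model's error is lower than the baseline's."""
    return 100.0 * (baseline_error - model_error) / baseline_error


# Illustrative daily aggression intensities for one user (made-up values).
history = [0.2, 0.4, 0.6]
future = [0.5, 0.3]

baseline_prediction = historical_average_forecast(history, horizon=len(future))
baseline_error = mae(future, baseline_prediction)
print(baseline_prediction, baseline_error)
```

Because Historical Average ignores both recency and social context, it serves as a floor that any socio-temporal model should clear.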

Overall Contributions and Future Directions

Our research journey, starting with fundamental multilingual detection, progressing to large-scale empirical analysis, and culminating in predictive forecasting, represents a significant stride towards a comprehensive understanding and mitigation of online aggression. We have contributed:

- Novel multilingual and English aggression datasets.

- Effective aggression detection models leveraging LSTMs and transformer architectures, including LLM-assisted detection.

- A “user aggression intensity” metric for nuanced behavioral analysis.

- TSGAN, a novel framework for forecasting individual user aggression in dynamic social networks.

- Insights into the behavioral patterns of aggressive users and the dynamics of aggression.

Looking ahead, our work aligns with several promising future research directions highlighted in the broader literature. These include developing more comprehensive and diverse datasets, effectively integrating multimodal and multilingual data, further leveraging the potential of large language models while ensuring interpretability and fairness, and incorporating sociological insights into computational models. Investigating cross-cultural differences in online aggression and developing robust models that can adapt to evolving language patterns remain critical challenges. Furthermore, focusing on the development and evaluation of effective intervention strategies is a crucial next step in translating our understanding into real-world impact.

Conclusion

The work undertaken by SoNAA since 2020 demonstrates a dedicated and evolving effort to tackle the complex problem of online aggression. From laying the foundation with multilingual detection to conducting in-depth empirical analysis and finally developing a sophisticated forecasting mechanism, our research provides valuable datasets, models, and insights. The development of TSGAN, in particular, marks a significant advancement in our ability to anticipate and potentially mitigate aggressive behavior in online social networks. By continuing to build upon these foundations and addressing the challenges that lie ahead, we aim to contribute meaningfully to creating safer and more harmonious online environments.